The LLM Context Tax: Best Tips for Tax Avoidance

The Context Tax is what you pay when you fill your context window with useless tokens. And in that case, taxation is theft.

Every token you send to an LLM costs money. Every token increases latency. And past a certain point, every additional token makes your agent dumber. This is the triple penalty of context bloat: higher costs, slower responses, and degraded performance through context rot, where the agent gets lost in its own accumulated noise.

Context engineering is not optional. The difference between a $0.50 query and a $5.00 query is often just how thoughtfully you manage context. Here’s what I’ll cover:

Stable Prefixes for KV Cache Hits - The single most important optimization for production agents

Append-Only Context - Why mutating context destroys your cache hit rate

Store Tool Outputs in the Filesystem - Cursor’s approach to avoiding context bloat

Design Precise Tools - How smart tool design reduces token consumption by 10x

Clean Your Data First (Maximize Your Deductions) - Strip the garbage before it enters context

Delegate to Cheaper Subagents (Offshore to Tax Havens) - Route token-heavy operations to smaller models

Reusable Templates Over Regeneration (Standard Deductions) - Stop regenerating the same code

The Lost-in-the-Middle Problem - Strategic placement of critical information

Server-Side Compaction (Depreciation) - Let the API handle context decay automatically

Output Token Budgeting (Withholding Tax) - The most expensive tokens are the ones you generate

The 200K Pricing Cliff (The Tax Bracket) - The tax bracket that doubles your bill overnight

Parallel Tool Calls (Filing Jointly) - Fewer round trips, less context accumulation

Application-Level Response Caching (Tax-Exempt Status) - The cheapest token is the one you never send

Why Context Tax Matters

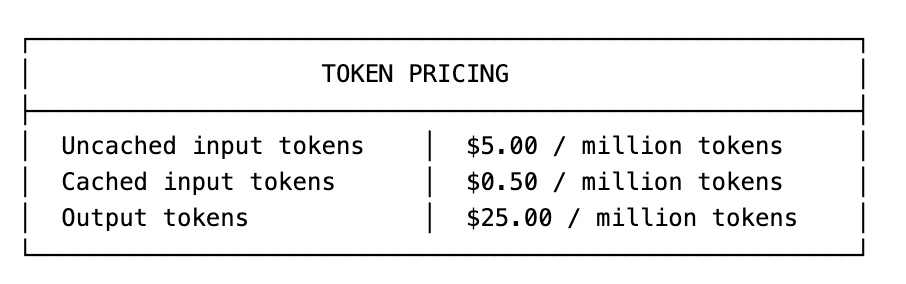

With Claude Opus 4.6, the math is brutal:

Cached input: $0.50 per million tokens

Uncached input: $5 per million tokens

Output: $25 per million tokens

That’s a 10x difference between cached and uncached inputs, and output tokens cost 5x more than uncached inputs. Most agent builders focus on prompt engineering while hemorrhaging money on context inefficiency.

In most agent workflows, context grows substantially with each step while outputs remain compact. This makes input token optimization critical: a typical agent task might involve 50 tool calls, each accumulating context.
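To make the compounding concrete, here is a rough cost model for a 50-step agent run. It is a sketch under loud simplifying assumptions: prices are the Opus figures used in this article ($5 per million uncached input tokens, $25 per million output tokens, cached input 10x cheaper at $0.50), the static prefix is already cached, every token sent in the previous request is a cache hit on the next one, and cache-write surcharges are ignored. The step sizes in the usage lines are hypothetical.

```python
UNCACHED_INPUT = 5.00   # $ per 1M uncached input tokens
CACHED_INPUT = 0.50     # $ per 1M cached input tokens (10x cheaper)
OUTPUT = 25.00          # $ per 1M output tokens (5x uncached input)


def agent_run_cost(steps, prefix_tokens, tokens_per_step, output_per_step, cached):
    """Estimate the dollar cost of an agent loop whose context grows every step.

    Simplifications: the static prefix is already cached, everything sent in the
    previous request is a cache hit on the next one, cache writes cost nothing extra.
    """
    cost = 0.0
    prior = prefix_tokens      # tokens already sent in an earlier request
    fresh = tokens_per_step    # tokens appended since the previous request
    for _ in range(steps):
        if cached:
            cost += prior * CACHED_INPUT + fresh * UNCACHED_INPUT
        else:
            cost += (prior + fresh) * UNCACHED_INPUT
        cost += output_per_step * OUTPUT
        # The next request re-sends this whole request, then appends this
        # step's output plus the next tool result.
        prior += fresh
        fresh = output_per_step + tokens_per_step
    return cost / 1_000_000


# Hypothetical run: 10K-token prefix, 2K tokens per tool result, 200 output tokens per step.
print(round(agent_run_cost(50, 10_000, 2_000, 200, cached=False), 1))  # 16.7
print(round(agent_run_cost(50, 10_000, 2_000, 200, cached=True), 1))   # 2.4
```

Under these assumptions the same 50-step run costs roughly seven times more when nothing hits the cache, and almost all of that gap comes from re-sending accumulated input, not from outputs.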

The performance penalty is equally severe. Research shows that past 32K tokens, most models degrade sharply. Your agent isn’t just getting expensive. It’s getting confused.

Stable Prefixes for KV Cache Hits

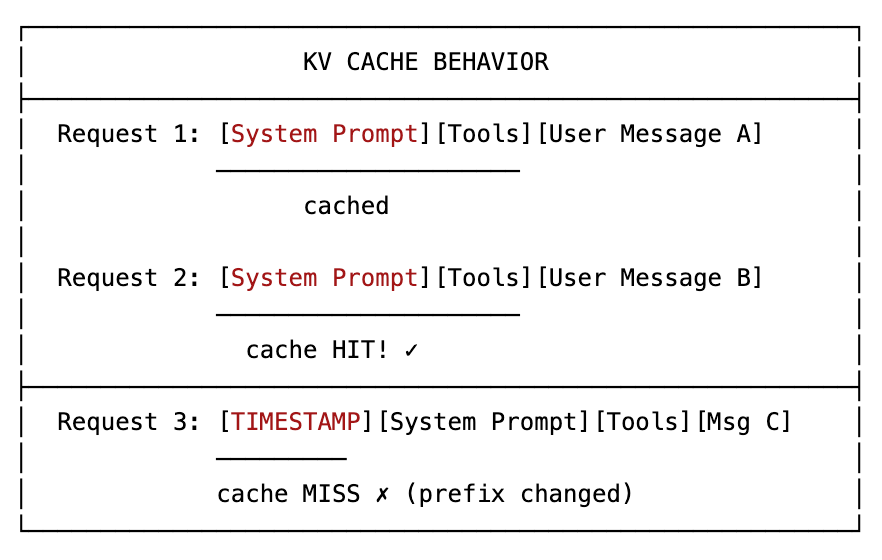

This is the single most important metric for production agents: KV cache hit rate.

The Manus team considers this the most important optimization for their agent infrastructure, and I agree completely. The principle is simple: LLMs process prompts autoregressively, token by token, so the key-value state for each token depends only on the tokens before it. If your prompt starts identically to a previous request, the provider can reuse the cached key-value computations for that shared prefix.

The killer of cache hit rates? Timestamps.

A common mistake is including a timestamp at the beginning of the system prompt. It’s a simple mistake, but the impact is massive. The key is granularity: including the date is fine. Including the hour is acceptable, because the prefix then changes at most once an hour, while cache entries typically expire after 5 minutes (Anthropic default) to 10 minutes (OpenAI default) anyway, with longer options available.

But never include seconds or milliseconds. A timestamp precise to the second guarantees every single request has a unique prefix. Zero cache hits. Maximum cost.

Move all dynamic content (including timestamps) to the END of your prompt. System instructions, tool definitions, few-shot examples, all of these should come first and remain identical across requests.
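A minimal sketch of this ordering, assuming a chat-style API with a separate system field (as in Anthropic's Messages API). The prompt text and the `build_request` helper are hypothetical; the point is that nothing before the newest user turn changes between requests, and the only dynamic value is a day-granularity date appended at the very end.

```python
from datetime import datetime, timezone

# Static prefix: never interpolate per-request values into this string.
SYSTEM_PROMPT = "You are a financial research agent. Follow the tool-use rules below..."


def build_request(history, user_message, now=None):
    """Return request fields with all dynamic content placed last.

    `history` must hold earlier messages exactly as they were sent, so the
    prefix stays byte-identical from one request to the next.
    """
    now = now or datetime.now(timezone.utc)
    # Day-level granularity: this suffix changes once a day, not per request.
    stamped = f"{user_message}\n\n(Current date: {now:%Y-%m-%d})"
    return {
        "system": SYSTEM_PROMPT,
        "messages": history + [{"role": "user", "content": stamped}],
    }


a = build_request([], "Summarize AAPL guidance", datetime(2025, 3, 1, 9, 0, 1, tzinfo=timezone.utc))
b = build_request([], "Summarize AAPL guidance", datetime(2025, 3, 1, 17, 45, 59, tzinfo=timezone.utc))
print(a == b)  # True: same day, same tokens, same cacheable prefix
```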

For distributed systems, ensure consistent request routing. Use session IDs to route requests to the same worker, maximizing the chance of hitting warm caches.
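One way to get sticky routing is to hash the session ID onto a worker list. `pick_worker` is a hypothetical helper, not part of any provider's API. It uses SHA-256 rather than Python's built-in `hash()`, because `hash()` on strings is randomized per process and would send the same session to different workers from different router instances.

```python
import hashlib


def pick_worker(session_id: str, workers: list[str]) -> str:
    """Map a session to the same worker on every request."""
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return workers[int.from_bytes(digest[:8], "big") % len(workers)]


workers = ["worker-a", "worker-b", "worker-c"]
print(pick_worker("session-123", workers))  # same answer on every call and every process
```

Plain modulo hashing reshuffles most sessions whenever the worker count changes; a consistent-hashing ring limits that churn if your fleet scales often.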

Append-Only Context

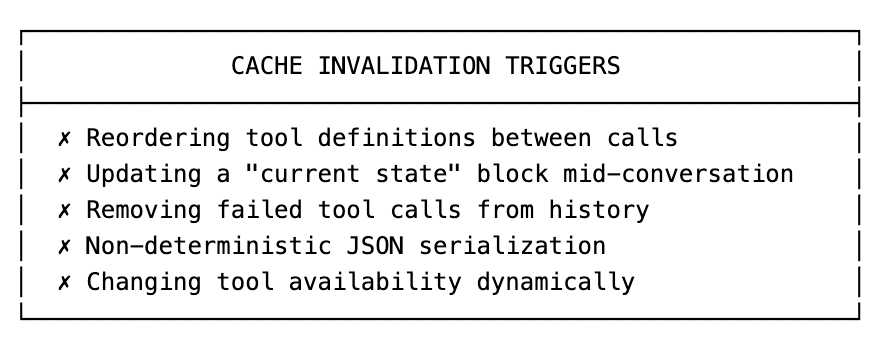

Context should be append-only. Any modification to earlier content invalidates the KV cache from that point forward.

This seems obvious, but the violations are subtle.

The tool definition problem is particularly insidious. If you dynamically add or remove tools based on context, you invalidate the cache for everything after the tool definitions.

Manus solved this elegantly: instead of removing tools, they mask token logits during decoding to constrain which actions the model can select. The tool definitions stay constant (cache preserved), but the model is guided toward valid choices through output constraints.
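A toy illustration of the masking idea, not Manus's implementation: before sampling, the logits of tokens that would begin a disallowed tool call are set to negative infinity, so the model cannot select them while the tool definitions in the prompt stay untouched. As a simplifying assumption, it uses a hypothetical mapping in which each tool name begins with its own distinct token.

```python
import math


def mask_tool_logits(logits, token_to_tool, allowed_tools):
    """Return a copy of `logits` with disallowed tool-start tokens set to -inf."""
    masked = list(logits)
    for token_id, tool in token_to_tool.items():
        if tool not in allowed_tools:
            masked[token_id] = -math.inf
    return masked


def greedy_pick(logits):
    """Index of the highest logit (greedy decoding)."""
    return max(range(len(logits)), key=logits.__getitem__)


# Hypothetical vocabulary slice: token 1 starts browser_open, 2 starts shell_exec, 3 starts file_read.
logits = [0.1, 2.0, 1.5, 0.3]
token_to_tool = {1: "browser_open", 2: "shell_exec", 3: "file_read"}
masked = mask_tool_logits(logits, token_to_tool, allowed_tools={"shell_exec", "file_read"})
print(greedy_pick(logits), greedy_pick(masked))  # 1 2
```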

For simpler implementations, keep your tool definitions static and handle invalid tool calls gracefully in your orchestration layer.

Deterministic serialization matters too. Python dicts preserve insertion order, so the same data built in a different order serializes to different JSON. If you’re serializing tool definitions or context as JSON, use sort_keys=True or a library that guarantees deterministic output. A different key order = different tokens = cache miss.
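A minimal helper for this using only the standard library's `json` module: `sort_keys=True` fixes the key order, and fixed `separators` keep the whitespace identical too. The tool definitions are illustrative.

```python
import json


def stable_dumps(obj) -> str:
    """Serialize to JSON with deterministic key order and whitespace."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


tool_a = {"name": "search", "input_schema": {"type": "object"}}
tool_b = {"input_schema": {"type": "object"}, "name": "search"}  # same data, different insertion order
print(json.dumps(tool_a) == json.dumps(tool_b))      # False
print(stable_dumps(tool_a) == stable_dumps(tool_b))  # True
```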

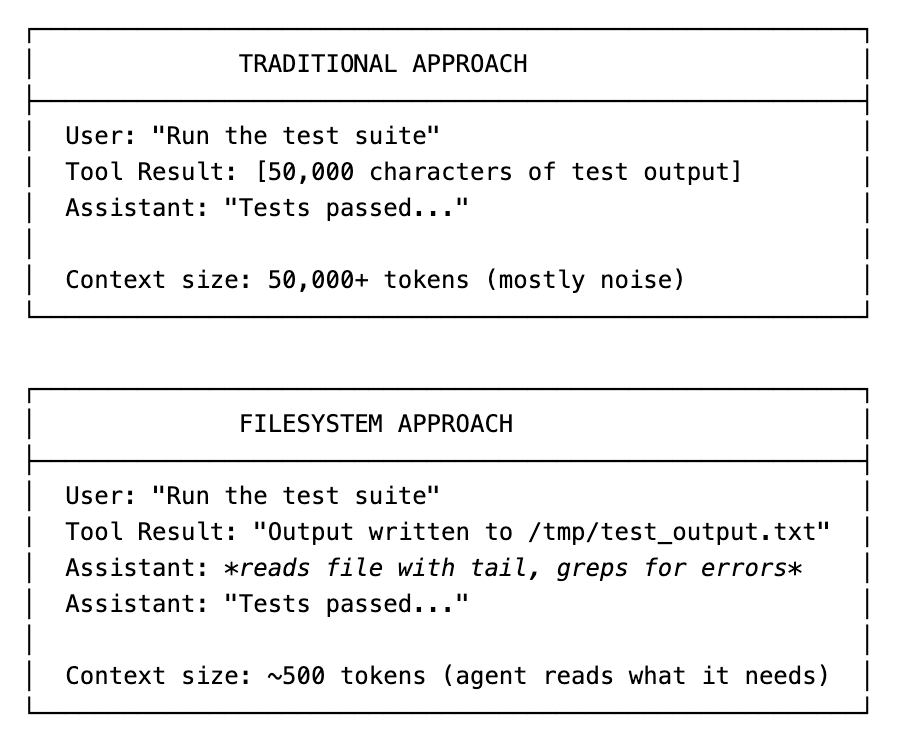

Store Tool Outputs in the Filesystem

Cursor’s approach to context management changed how I think about agent architecture. Instead of stuffing tool outputs into the conversation, write them to files.

In their A/B testing, this reduced total agent tokens by 46.9% for runs using MCP tools.

The insight: agents don’t need complete information upfront. They need the ability to access information on demand. Files are the perfect abstraction for this.

We apply this pattern everywhere:

Shell command outputs: Write to files, let agent tail or grep as needed

Search results: Return file paths, not full document contents

API responses: Store raw responses, let agent extract what matters

Intermediate computations: Persist to disk, reference by path
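Here is a minimal sketch of the first pattern, assuming a Python orchestrator. `run_and_store` is a hypothetical tool wrapper: it runs a command, writes the full output to a file, and hands the agent only a short receipt (exit code, line count, path, and the last few lines), so the agent can `tail` or `grep` the file later if it needs more.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path


def run_and_store(cmd, out_dir, tail_lines=5):
    """Run `cmd`, persist full stdout/stderr to a file, return (path, short summary)."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    fd, name = tempfile.mkstemp(prefix="cmd_", suffix=".log", dir=out_dir)
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        f.write(result.stdout)
        if result.stderr:
            f.write("\n--- stderr ---\n" + result.stderr)
    lines = result.stdout.splitlines()
    summary = (
        f"exit={result.returncode} lines={len(lines)} saved_to={name}\n"
        + "last lines:\n"
        + "\n".join(lines[-tail_lines:])
    )
    return Path(name), summary


if __name__ == "__main__":
    # 1,000 lines of output, but only the short summary enters the agent's context.
    path, summary = run_and_store(
        [sys.executable, "-c", "for i in range(1000): print(i)"], tempfile.mkdtemp()
    )
    print(summary)
```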

When context windows fill up, Cursor triggers a summarization step but exposes chat history as files. The agent can search through past conversations to recover details lost in the lossy compression. Clever.

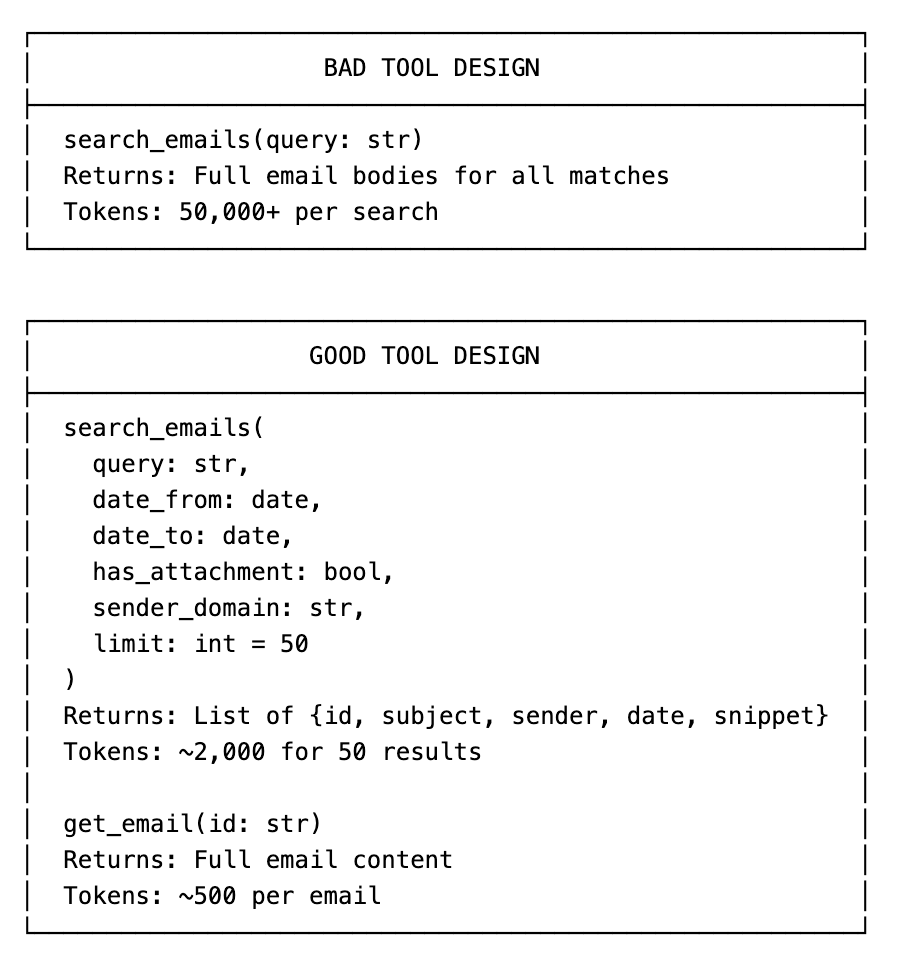

Design Precise Tools

A vague tool returns everything. A precise tool returns exactly what the agent needs.

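Consider an email search tool. A vague version returns every matching email in full; a precise version splits the job into two phases.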

The two-phase pattern: search returns metadata, separate tool returns full content. The agent decides which items deserve full retrieval.
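A minimal sketch of the two-phase pattern over an in-memory inbox. The `Email` type, `search_emails`, and `read_email` are hypothetical names; in a real agent each function would be registered as a tool. Phase one returns only metadata, and filter parameters let the agent narrow results before opening anything.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Email:
    id: str
    sender: str
    subject: str
    sent: date
    body: str
    has_attachment: bool = False


def search_emails(inbox, query, sender=None, has_attachment=None, since=None, limit=20):
    """Phase 1: return metadata for matching emails, never the bodies."""
    q = query.lower()
    hits = []
    for e in inbox:
        if q not in e.subject.lower() and q not in e.body.lower():
            continue
        if sender is not None and e.sender != sender:
            continue
        if has_attachment is not None and e.has_attachment != has_attachment:
            continue
        if since is not None and e.sent < since:
            continue
        hits.append({"id": e.id, "sender": e.sender, "subject": e.subject,
                     "sent": e.sent.isoformat(), "has_attachment": e.has_attachment})
    return hits[:limit]


def read_email(inbox, email_id):
    """Phase 2: full body for the one email the agent chose to open."""
    for e in inbox:
        if e.id == email_id:
            return e.body
    raise KeyError(email_id)
```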

This is exactly how our conversation history tool works at Fintool. The agent passes date ranges or search terms, and the tool returns up to 100-200 results containing only user messages and metadata. The agent then reads specific conversations by passing the conversation ID. Filter parameters like has_attachment, time_range, and sender let the agent narrow results before reading anything.

The same pattern applies everywhere:

Document search: Return titles and snippets, not full documents

Database queries: Return row counts and sample rows, not full result sets

File listings: Return paths and metadata, not contents

API integrations: Return summaries, let agent drill down

Each parameter you add to a tool is a chance to reduce returned tokens by an order of magnitude.

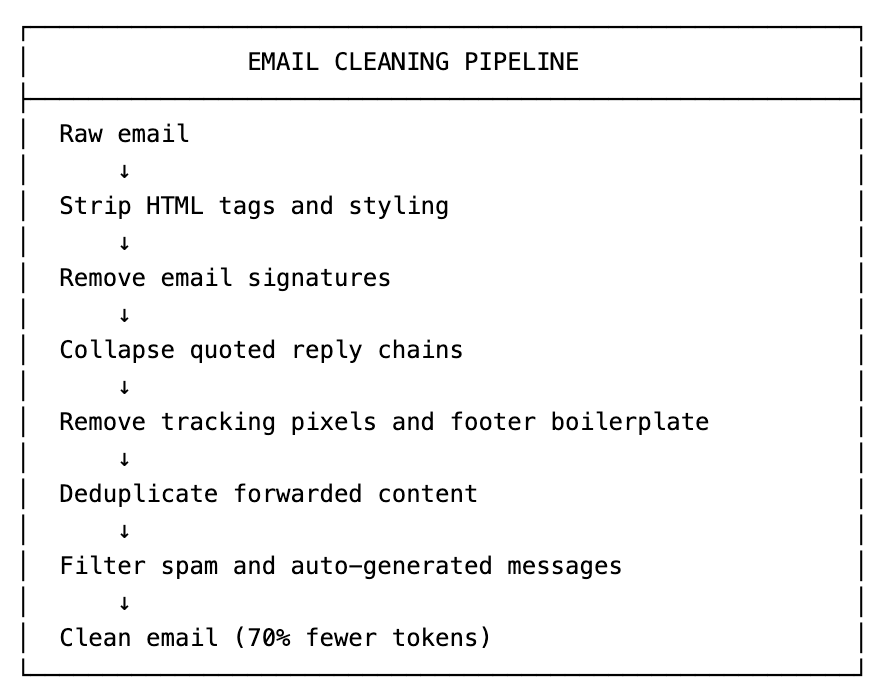

Clean Your Data First (Maximize Your Deductions)

Garbage tokens are still tokens. Clean your data before it enters context.

For emails, this means:

For HTML content, the gains are even larger. A typical webpage might be 100KB of HTML but only 5KB of actual content. CSS selectors that extract semantic regions (article, main, section) and discard navigation, ads, and tracking can reduce token counts by 90%+.
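The paragraph above describes CSS selectors; to stay dependency-free, this sketch does the same region filtering with the standard library's `html.parser` instead. It keeps text inside `article`, `main`, and `section` and drops anything nested in navigation, script, style, header, footer, and aside elements. The tag lists are assumptions to tune per site, and the parser will not handle every malformed page gracefully.

```python
from html.parser import HTMLParser

KEEP = {"article", "main", "section"}
DROP = {"nav", "script", "style", "header", "footer", "aside"}


class ContentExtractor(HTMLParser):
    """Collect text inside KEEP regions, skipping anything nested in DROP tags."""

    def __init__(self):
        super().__init__()
        self.keep_depth = 0
        self.drop_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in DROP:
            self.drop_depth += 1
        elif tag in KEEP:
            self.keep_depth += 1

    def handle_endtag(self, tag):
        if tag in DROP and self.drop_depth:
            self.drop_depth -= 1
        elif tag in KEEP and self.keep_depth:
            self.keep_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.keep_depth and not self.drop_depth:
            self.chunks.append(text)


def extract_main_text(html: str) -> str:
    parser = ContentExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


page = ("<html><nav>Home | About</nav><main><h1>Q3 results</h1><p>Revenue rose.</p>"
        "<script>track()</script></main><footer>(c) 2024</footer></html>")
print(extract_main_text(page))  # Q3 results Revenue rose.
```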

Markdown uses significantly fewer tokens than HTML, making conversion valuable for any web content entering your pipeline.

For financial data specifically:

Strip SEC filing boilerplate (every 10-K has the same legal disclaimers)

Collapse repeated table headers across pages

Remove watermarks and page numbers from extracted text

Normalize whitespace (multiple spaces, tabs, excessive newlines)

Convert HTML tables to markdown tables
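A sketch of a few of these steps with the standard library: dropping page-number lines, keeping only the first copy of a repeated table header, normalizing whitespace, and rendering already-parsed table rows as markdown. The page-number pattern and the helper names are assumptions; note that a bare number on its own line (a year in a table, say) would also be dropped, so tune the pattern to your documents.

```python
import re

# Assumed page-number formats: "12", "Page 12", "Page 12 of 80".
PAGE_NUMBER = re.compile(r"^\s*(page\s+)?\d+(\s+of\s+\d+)?\s*$", re.IGNORECASE)


def clean_filing_text(text, repeated_header=None):
    """Drop page numbers, keep the first copy of a repeated header, normalize whitespace."""
    out, seen_header = [], False
    for line in text.splitlines():
        if PAGE_NUMBER.match(line):
            continue
        line = re.sub(r"[ \t]+", " ", line).strip()
        if repeated_header is not None and line == repeated_header:
            if seen_header:
                continue
            seen_header = True
        out.append(line)
    # Collapse runs of blank lines into a single blank line.
    return re.sub(r"\n{3,}", "\n\n", "\n".join(out)).strip()


def rows_to_markdown(rows):
    """Render parsed table rows (first row = header) as a markdown table."""
    header, *body = rows
    lines = ["| " + " | ".join(header) + " |", "|" + " --- |" * len(header)]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)
```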

The principle: remove noise at the earliest possible stage, not after tokenization. Every preprocessing step that runs before the LLM call saves money and improves quality.

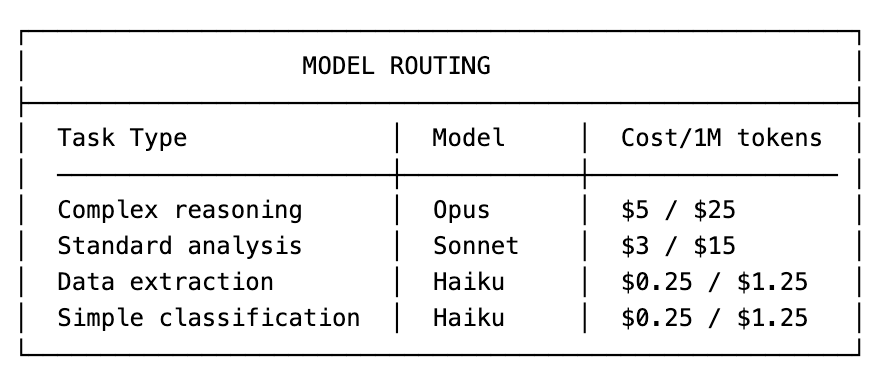

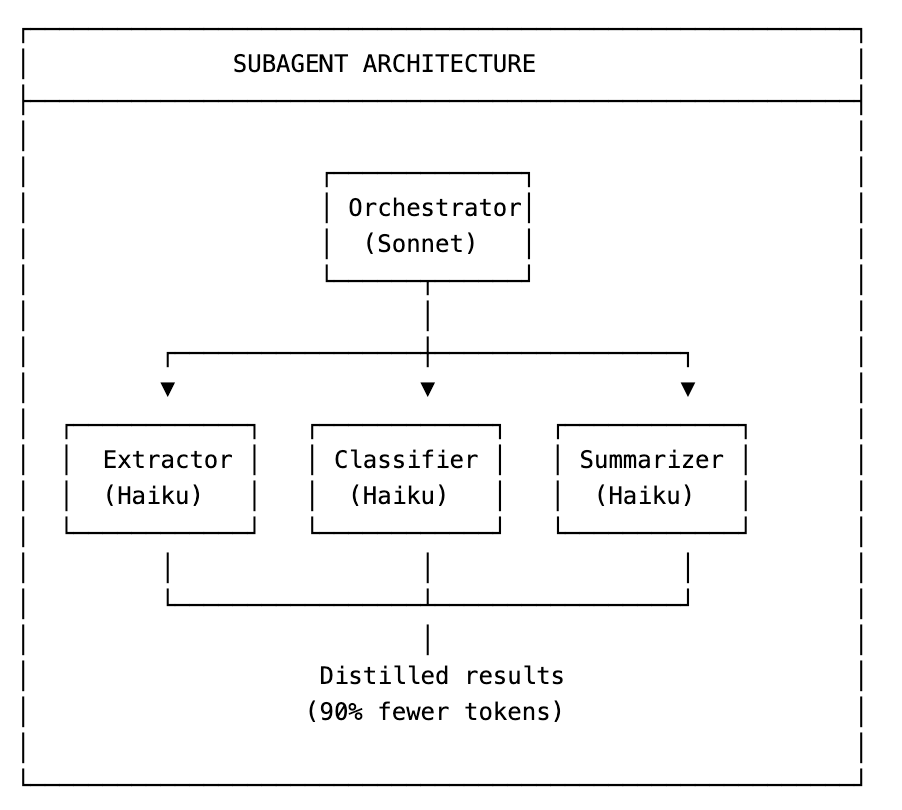

Delegate to Cheaper Subagents (Offshore to Tax Havens)

Not every task needs your most expensive model.

The Claude Code subagent pattern processes 67% fewer tokens overall due to context isolation. Instead of stuffing every intermediate search result into a single global context, workers keep only what’s relevant inside their own window and return distilled outputs.

Tasks perfect for cheaper subagents:

Data extraction: Pull specific fields from documents

Classification: Categorize emails, documents, or intents

Summarization: Compress long documents before main agent sees them

Validation: Check outputs against criteria

Formatting: Convert between data formats

The orchestrator sees condensed results, not raw context. This prevents hitting context limits and reduces the risk of the main agent getting confused by irrelevant details.

Scope subagent tasks tightly. The more iterations a subagent requires, the more context it accumulates and the more tokens it consumes. Design for single-turn completion when possible.
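A minimal sketch of the delegation boundary. `call_model` stands in for whatever client function you use, and the model names are placeholders, not real model IDs. The shape is what matters: the subagent sees the full document in its own single-turn call with a tight output cap, and the orchestrator keeps only the short answers.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signature: (model, prompt, max_tokens) -> response text.
ModelCall = Callable[[str, str, int], str]


@dataclass
class Subagent:
    model: str           # placeholder for a smaller, cheaper model
    max_tokens: int      # tight cap: the subagent returns a distilled answer
    call_model: ModelCall

    def run(self, instruction: str, document: str) -> str:
        # Single turn: the raw document lives only in this call's context.
        prompt = f"{instruction}\n\n<document>\n{document}\n</document>"
        return self.call_model(self.model, prompt, self.max_tokens)


def extract_for_orchestrator(documents, subagent):
    """Only the condensed outputs are returned to the main agent's context."""
    instruction = "Extract the total revenue figure. Reply with the number only."
    return [subagent.run(instruction, doc) for doc in documents]
```

Wired to a real SDK, `call_model` would wrap a single API request with the given model name and `max_tokens`.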

Reusable Templates Over Regeneration (Standard Deductions)

Every time an agent generates code from scratch, you’re paying for output tokens. Output tokens cost 5x input tokens with Claude. Stop regenerating the same patterns.

Our document generation workflow used to be painfully inefficient:

OLD APPROACH:

User: “Create a DCF model for Apple”

Agent: *generates 2,000 lines of Excel formulas from scratch*

Cost: ~$0.50 in output tokens alone

NEW APPROACH:

User: “Create a DCF model for Apple”

Agent: *loads DCF template, fills in Apple-specific values*

Cost: ~$0.05

The template approach:

Skill references template: dcf_template.xlsx in /public/skills/dcf/

Agent reads template once: Understands structure and placeholders

Agent fills parameters: Company-specific values, assumptions

WriteFile with minimal changes: Only modified cells, not full regeneration
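A dependency-free sketch of the fill step, treating the template as a mapping from cell references to values. The `{{name}}` placeholder syntax and `fill_template` are assumptions for illustration; with a real .xlsx you would load and save the workbook through a spreadsheet library, but the idea is the same: compute only the cells that change and leave formulas alone.

```python
import re

PLACEHOLDER_RE = re.compile(r"\{\{(\w+)\}\}")  # assumed placeholder syntax: {{name}}


def fill_template(cells, params):
    """Return only the cells whose placeholders were filled.

    `cells` maps cell references (e.g. "B3") to template values. Formulas
    without placeholders are left untouched, so the agent never rewrites them.
    """
    changes = {}
    for ref, value in cells.items():
        if isinstance(value, str) and PLACEHOLDER_RE.search(value):
            changes[ref] = PLACEHOLDER_RE.sub(lambda m: str(params[m.group(1)]), value)
    return changes


template = {
    "A1": "DCF Model: {{company}}",
    "B3": "{{growth_rate}}",
    "B5": "=B4*(1+B3)",  # formula: untouched
}
print(fill_template(template, {"company": "Apple", "growth_rate": 0.05}))
# {'A1': 'DCF Model: Apple', 'B3': '0.05'}
```

This sketch writes every filled value as a string; a real workbook writer would keep numeric inputs numeric.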

For code generation, the same principle applies. If your agent frequently generates similar Python scripts, data processing pipelines, or analysis frameworks, create reusable functions:

# Instead of regenerating this every time:
def process_earnings_transcript(path):
    # 50 lines of parsing code...
    ...

# Reference a skill with reusable utilities:
from skills.earnings import parse_transcript, extract_guidance

The agent imports and calls rather than regenerates. Fewer output tokens, faster responses, more consistent results.

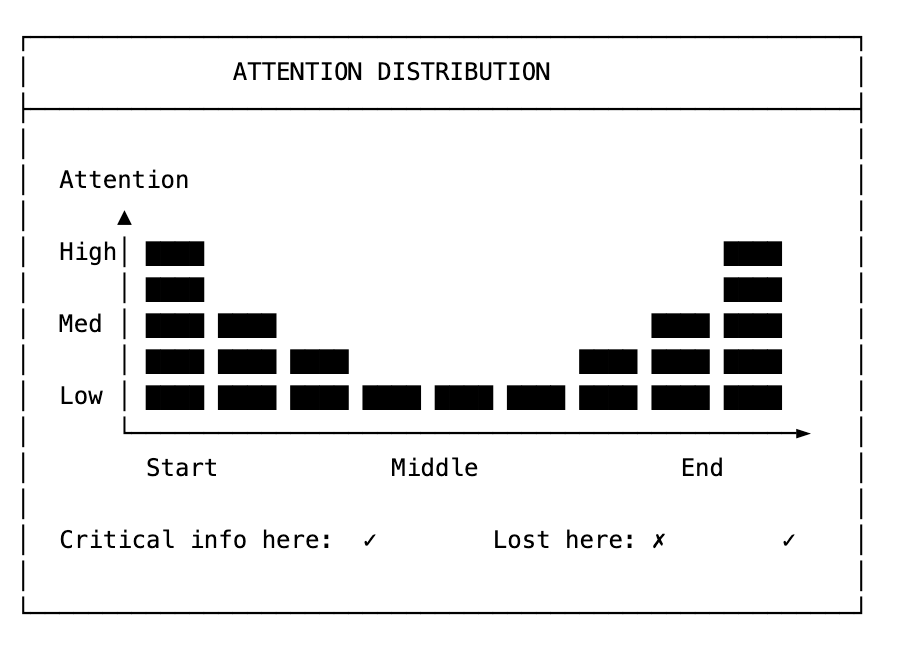

The Lost-in-the-Middle Problem

LLMs don’t process context uniformly. Research shows a consistent U-shaped attention pattern: models attend strongly to the beginning and end of prompts while “losing” information in the middle.

Strategic placement matters:

System instructions: Beginning (highest attention)

Current user request: End (recency bias)

Critical context: Beginning or end, never middle

Lower-priority background: Middle (acceptable loss)

For retrieval-augmented generation, this means reordering retrieved documents. The most relevant chunks should go at the beginning and end. Lower-ranked chunks fill the middle.
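One simple way to do this reordering, assuming `ranked` is sorted best-first: alternate chunks between the front and the back of the list, so the top results sit at the edges and the weakest land in the middle. This is a sketch of the general idea, not a specific library's implementation.

```python
def reorder_for_edges(ranked):
    """Place best-ranked chunks at both ends and the lowest-ranked in the middle."""
    front, back = [], []
    for i, chunk in enumerate(ranked):
        (front if i % 2 == 0 else back).append(chunk)
    return front + back[::-1]


print(reorder_for_edges(["r1", "r2", "r3", "r4", "r5"]))
# ['r1', 'r3', 'r5', 'r4', 'r2']
```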

Manus uses an elegant hack: they maintain a todo.md file that gets updated throughout task execution. This “recites” current objectives at the end of context, combating the lost-in-the-middle effect across their typical 50-tool-call trajectories. We use a similar architecture at Fintool.
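A sketch of the recitation idea, assuming the agent has a file-writing tool. `render_todo` and `update_todo` are hypothetical: the agent rewrites the plan as a markdown checklist whenever progress changes, and because the tool echoes the file back as its result, the current objectives land at the end of the context instead of staying buried near the start.

```python
from pathlib import Path


def render_todo(items):
    """items: list of (task, done) pairs -> markdown checklist."""
    lines = [f"- [{'x' if done else ' '}] {task}" for task, done in items]
    return "# Todo\n" + "\n".join(lines) + "\n"


def update_todo(path, items):
    """Tool handler: rewrite todo.md and return its text as the tool result."""
    text = render_todo(items)
    Path(path).write_text(text, encoding="utf-8")
    return text  # echoed into context, so the plan is restated at the end
```

Because the echo arrives as a new tool result, this keeps the context append-only: nothing earlier in the conversation is edited.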

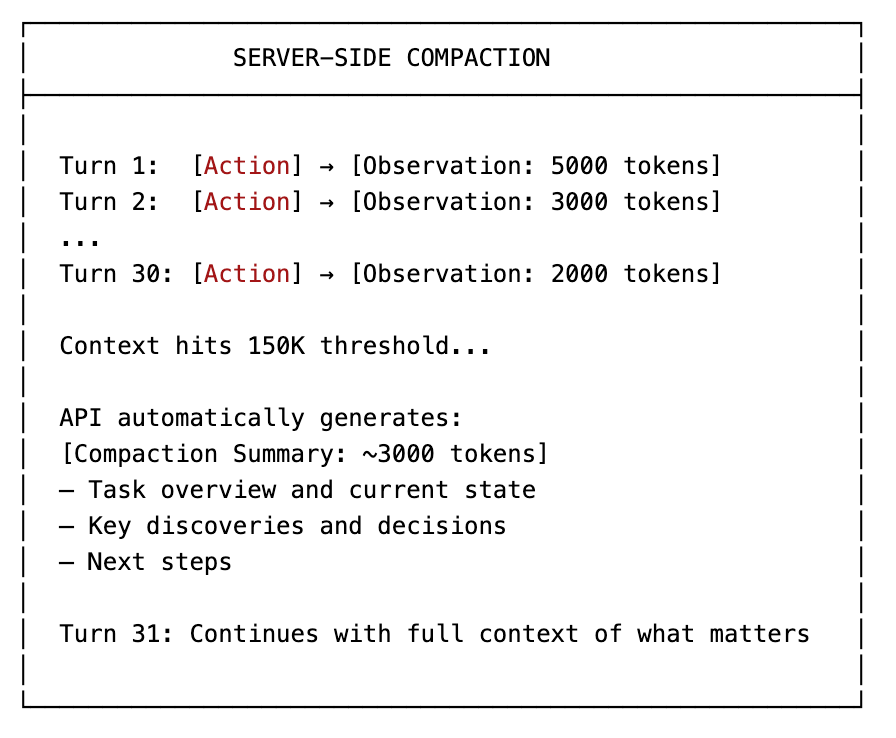

Server-Side Compaction (Depreciation)

As agents run, context grows until it hits the window limit. You used to have two options: build your own summarization pipeline, or implement observation masking (replacing old tool outputs with placeholders). Both require significant engineering.
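For reference, the do-it-yourself observation masking mentioned above can be small. This sketch assumes Anthropic-style message dicts in which tool outputs are `tool_result` content blocks; it replaces all but the most recent `keep_last` outputs with a placeholder and builds new lists rather than mutating the input. Because it rewrites earlier context, each masking pass invalidates the KV cache from the first masked block onward, so it is best applied rarely and in one batch.

```python
MASK_TEXT = "[tool output removed to save context; call the tool again if needed]"


def mask_old_observations(messages, keep_last=3):
    """Replace older tool_result contents with a placeholder, keeping the last `keep_last`."""
    positions = [
        (i, j)
        for i, msg in enumerate(messages)
        if isinstance(msg.get("content"), list)
        for j, block in enumerate(msg["content"])
        if block.get("type") == "tool_result"
    ]
    to_mask = set(positions[:-keep_last] if keep_last else positions)
    masked = []
    for i, msg in enumerate(messages):
        if not isinstance(msg.get("content"), list):
            masked.append(msg)
            continue
        blocks = [
            {**block, "content": MASK_TEXT} if (i, j) in to_mask else block
            for j, block in enumerate(msg["content"])
        ]
        masked.append({**msg, "content": blocks})
    return masked
```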

Now you can let the API handle it. Anthropic’s server-side compaction automatically summarizes your conversation when it approaches a configurable token threshold. Claude Code uses this internally, and it’s the reason you can run 50+ tool call sessions without the agent losing track of what it’s doing.

The key design decisions:

Trigger threshold: Default is 150K tokens. Set it lower for more headroom under the 200K pricing cliff, or higher if you need more raw context before summarizing.

Custom instructions: You can replace the default summarization prompt entirely. For financial workflows, something like “Preserve all numerical data, company names, and analytical conclusions” prevents the summary from losing critical details.

Pause after compaction: The API can pause after generating the summary, letting you inject additional context (like preserving the last few messages verbatim) before continuing. This gives you control over what survives the compression.

Compaction also stacks well with prompt caching. Add a cache breakpoint on your system prompt so it stays cached separately. When compaction occurs, only the summary needs to be written as a new cache entry. Your system prompt cache stays warm.
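A sketch of the cache-breakpoint part, using the Messages API's `cache_control` field on a system content block. It only builds the request arguments; compaction settings are left out, and the prompt text, default model, and `build_request_kwargs` helper are illustrative.

```python
STATIC_SYSTEM_PROMPT = "You are a financial research agent..."  # illustrative


def build_request_kwargs(messages, model="claude-sonnet-4-20250514", max_tokens=2000):
    """Arguments for client.messages.create with the system prompt cached separately."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": STATIC_SYSTEM_PROMPT,
                # Cache breakpoint: the prefix up to here gets its own cache entry,
                # which stays valid however the conversation below it changes.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": messages,
    }


# Usage with the official SDK (not executed here):
# client.messages.create(**build_request_kwargs(history))
```

Prompts shorter than the model's minimum cacheable length are not cached, so this pays off once the system prompt and tool definitions are reasonably large.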

The beauty of this approach: context depreciates in value over time, and the API handles the depreciation schedule for you.

Output Token Budgeting (Withholding Tax)

Output tokens are the most expensive tokens. With Claude Sonnet, outputs cost 5x inputs. Opus applies the same 5x multiple to an input price that is already higher.

Yet most developers set max_tokens to one generous ceiling for every task and hope for the best.

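import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

# BAD: one generous ceiling for every task
response = client.messages.create(
    model="claude-sonnet-4-20250514",
    max_tokens=8192,  # Model might use all of this
    messages=[...],  # your conversation here
)

# GOOD: Task-appropriate limits
TASK_LIMITS = {
    "classification": 50,
    "extraction": 200,
    "short_answer": 500,
    "analysis": 2000,
    "code_generation": 4000,
}

response = client.messages.create(
    model="claude-sonnet-4-20250514",
    max_tokens=TASK_LIMITS["classification"],
    messages=[...],  # your conversation here
)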

Structured outputs reduce verbosity. JSON responses use fewer tokens than natural language explanations of the same information.

Natural language: “The company’s revenue was 94.5 billion dollars, which represents a year-over-year increase of 12.2 percent compared to the previous fiscal year’s revenue of 84.2 billion dollars.”

Structured: {"revenue": 94.5, "unit": "B", "yoy_change": 12.2}
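To see the difference, here is the same fact in both forms, with the growth rate derived from the two revenue figures in the example. Character counts are only a rough proxy for tokens, since exact counts depend on the tokenizer; compact `separators` in `json.dumps` trim a little more whitespace.

```python
import json

revenue, prior_revenue = 94.5, 84.2  # $B, the figures from the example above
yoy_change = round((revenue / prior_revenue - 1) * 100, 1)

natural = (
    f"The company's revenue was {revenue} billion dollars, which represents a "
    f"year-over-year increase of {yoy_change} percent compared to the previous "
    f"fiscal year's revenue of {prior_revenue} billion dollars."
)
structured = json.dumps(
    {"revenue": revenue, "unit": "B", "yoy_change": yoy_change},
    separators=(",", ":"),  # no spaces after commas or colons
)

print(structured)                     # {"revenue":94.5,"unit":"B","yoy_change":12.2}
print(len(natural), len(structured))
```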

For agents specifically, consider response chunking. Instead of generating a 10,000-token analysis in one shot, break it into phases:

Outline phase: Generate structure (500 tokens)

Section phases: Generate each section on demand (1000 tokens each)

Review phase: Check and refine (500 tokens)

This gives you control points to stop early if the user has what they need, rather than always generating the maximum possible output.
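A minimal sketch of phased generation with early stopping. `generate` stands in for a model call that takes a prompt and a `max_tokens` cap, and `wants_more` is a hypothetical hook (for example, the user clicking "continue"); the caps match the phase budgets listed above.

```python
from typing import Callable, List

Generate = Callable[[str, int], str]       # hypothetical: (prompt, max_tokens) -> text
WantsMore = Callable[[List[str]], bool]    # hypothetical: sections so far -> continue?


def phased_analysis(topic: str, generate: Generate, wants_more: WantsMore):
    # Outline phase: a small budget for structure only.
    outline = generate(f"Outline an analysis of {topic}. One section title per line.", 500)
    sections: List[str] = []
    for title in (line.strip() for line in outline.splitlines()):
        if not title:
            continue
        if not wants_more(sections):
            break  # stop early: the user already has what they need
        # Section phase: each section is generated on demand with its own cap.
        sections.append(generate(f"Write the '{title}' section of the {topic} analysis.", 1000))
    # Review phase: a short critique of whatever was actually written.
    review = ""
    if sections:
        review = generate("List corrections for this draft:\n\n" + "\n\n".join(sections), 500)
    return outline, sections, review
```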

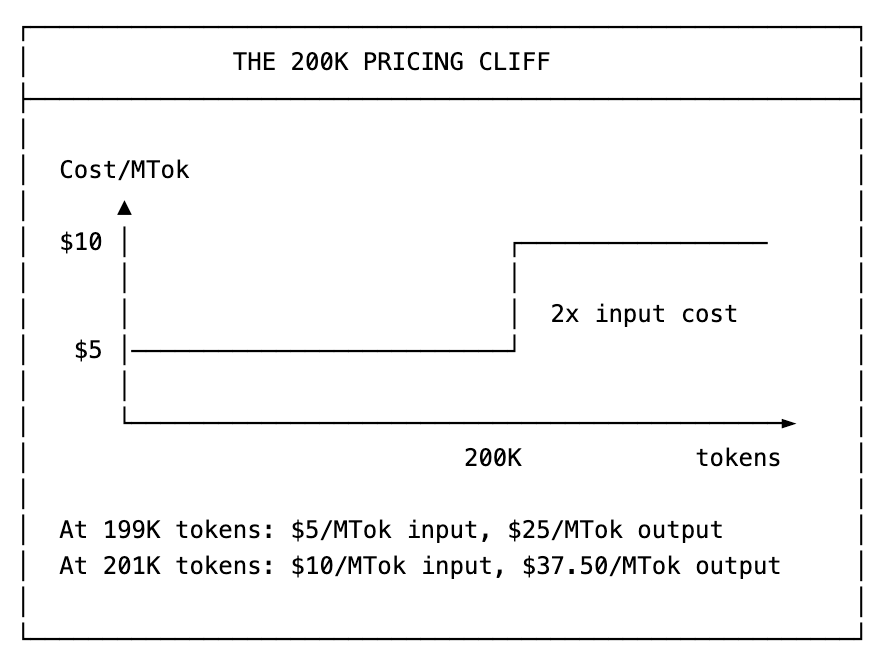

The 200K Pricing Cliff (The Tax Bracket)

With Claude Opus 4.6 and Sonnet 4.5, crossing 200K input tokens triggers premium pricing. Input cost doubles and output cost rises by half: Opus goes from $5 to $10 per million input tokens, and output jumps from $25 to $37.50 per million. This isn’t gradual. It’s a cliff.
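A quick worked example using the Opus rates above. It applies the premium rates to the entire request once input exceeds 200K tokens, which is how the cliff is described here; check your provider's pricing page for the exact rule.

```python
def opus_request_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request, with premium rates once input exceeds 200K tokens."""
    premium = input_tokens > 200_000
    input_rate = 10.00 if premium else 5.00     # $ per 1M input tokens
    output_rate = 37.50 if premium else 25.00   # $ per 1M output tokens
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


print(opus_request_cost(199_000, 1_000))  # 1.02
print(opus_request_cost(201_000, 1_000))  # 2.0475
```

Two thousand extra input tokens roughly double the bill for that request.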

This is the LLM equivalent of a tax bracket. And just like tax planning, the right strategy is to stay under the threshold when you can.

For agent workflows that risk crossing 200K, implement a context budget. Track cumulative input tokens across tool calls. When you approach the cliff, trigger aggressive compression: observation masking, summarization of older turns, or pruning low-value context. The cost of a compression step is far less than doubling your per-token rate for the rest of the conversation.
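A sketch of such a budget check. `count_tokens` and the compressor functions are hypothetical hooks (the counts could come from the usage figures the API reports on each response), and the 30K headroom is an arbitrary example value that leaves room for the next tool result. Compressors run cheapest-first and stop as soon as the context fits; if it still does not fit after all of them, the caller decides what to do.

```python
from typing import Callable, List, Sequence, Tuple

Messages = List[dict]


def enforce_budget(
    messages: Messages,
    count_tokens: Callable[[Messages], int],
    compressors: Sequence[Callable[[Messages], Messages]],
    cliff: int = 200_000,
    headroom: int = 30_000,
) -> Tuple[Messages, int]:
    """Apply compressors in order until the context is below cliff - headroom."""
    limit = cliff - headroom
    for compress in compressors:
        if count_tokens(messages) < limit:
            break
        messages = compress(messages)
    return messages, count_tokens(messages)
```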

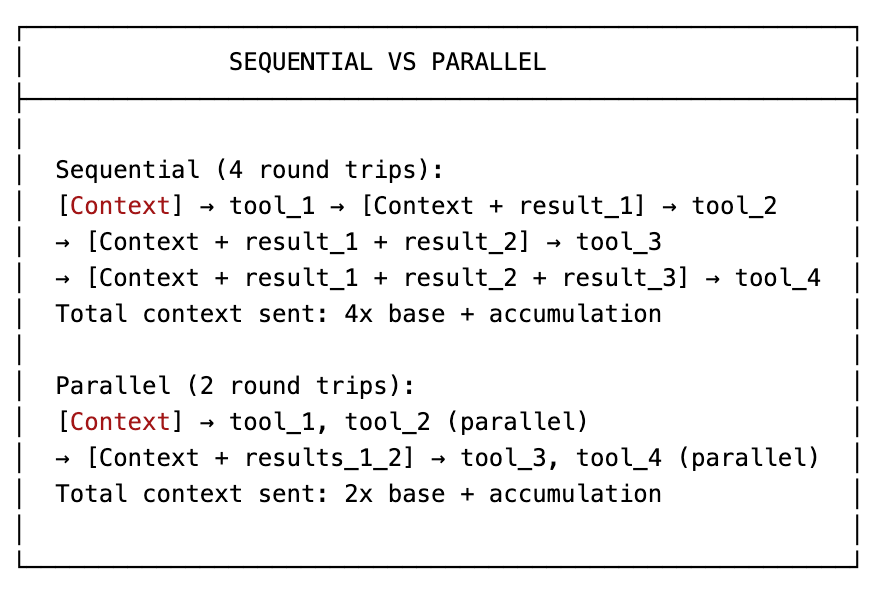

Parallel Tool Calls (Filing Jointly)

Every sequential tool call is a round trip. Each round trip re-sends the full conversation context. If your agent makes 20 tool calls sequentially, that’s 20 times the context gets transmitted and billed.

The Anthropic API supports parallel tool calls: the model can request multiple independent tool calls in a single response, and you execute them simultaneously. This means fewer round trips for the same amount of work.
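On the client side, the loop only needs to run every `tool_use` block from one assistant response concurrently and send all the results back in a single user message. This sketch assumes Anthropic-style content blocks as plain dicts and a hypothetical `tools` registry mapping tool names to Python functions; a thread pool suits I/O-bound tools such as HTTP calls.

```python
from concurrent.futures import ThreadPoolExecutor


def run_tool_calls(content_blocks, tools):
    """Execute all tool_use blocks from one response in parallel.

    Returns a single user message holding one tool_result per call, in the
    same order as the calls.
    """
    calls = [b for b in content_blocks if b.get("type") == "tool_use"]

    def run(call):
        try:
            output = tools[call["name"]](**call["input"])
            return {"type": "tool_result", "tool_use_id": call["id"], "content": str(output)}
        except Exception as exc:  # report failures to the model instead of crashing
            return {"type": "tool_result", "tool_use_id": call["id"],
                    "content": f"Error: {exc}", "is_error": True}

    with ThreadPoolExecutor(max_workers=max(1, len(calls))) as pool:
        results = list(pool.map(run, calls))
    return {"role": "user", "content": results}
```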

The savings compound. With fewer round trips, you accumulate less intermediate context, which means each subsequent round trip is also cheaper. Design your tools so that independent operations can be identified and batched by the model.

Application-Level Response Caching (Tax-Exempt Status)

The cheapest token is the one you never send to the API.

Before any LLM call, check if you’ve already answered this question. At Fintool, we cache aggressively for earnings call summarizations and common queries. When a user asks for Apple’s latest earnings summary, we don’t regenerate it from scratch for every request. The first request pays the full cost. Every subsequent request is essentially free.

This operates above the LLM layer entirely. It’s not prompt caching or KV cache. It’s your application deciding that this query has a valid cached response and short-circuiting the API call.

Good candidates for application-level caching:

Factual lookups: Company financials, earnings summaries, SEC filings

Common queries: Questions that many users ask about the same data

Deterministic transformations: Data formatting, unit conversions

Stable analysis: Any output that won’t change until the underlying data changes

The cache invalidation strategy matters. For financial data, earnings call summaries are stable once generated. Real-time price data obviously isn’t. Match your cache TTL to the volatility of the underlying data.
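A minimal in-process sketch of this, with per-category TTLs. The category names and TTL values are illustrative assumptions, and `compute` stands in for the full agent or LLM call; a production version would usually live in a shared store rather than a Python dict.

```python
import hashlib
import json
import math
import time
from typing import Any, Callable, Dict, Tuple

# Illustrative TTLs (seconds), matched to how volatile the underlying data is.
TTL_BY_KIND = {
    "earnings_summary": math.inf,  # stable once the call has happened
    "price_quote": 15,             # real-time data goes stale quickly
}


class ResponseCache:
    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._store: Dict[str, Tuple[float, Any]] = {}
        self._clock = clock

    @staticmethod
    def key(kind: str, params: dict) -> str:
        # Deterministic key: same kind + same params -> same hash.
        payload = json.dumps({"kind": kind, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_compute(self, kind: str, params: dict, compute: Callable[[], Any]) -> Any:
        k = self.key(kind, params)
        now = self._clock()
        hit = self._store.get(k)
        if hit is not None and hit[0] > now:
            return hit[1]            # cache hit: no API call at all
        value = compute()            # cache miss: pay for the LLM call once
        self._store[k] = (now + TTL_BY_KIND[kind], value)
        return value
```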

Even partial caching helps. If an agent task involves five tool calls of roughly equal cost and you can cache two of them, you’ve cut about 40% of your tool-related token costs without touching the LLM.

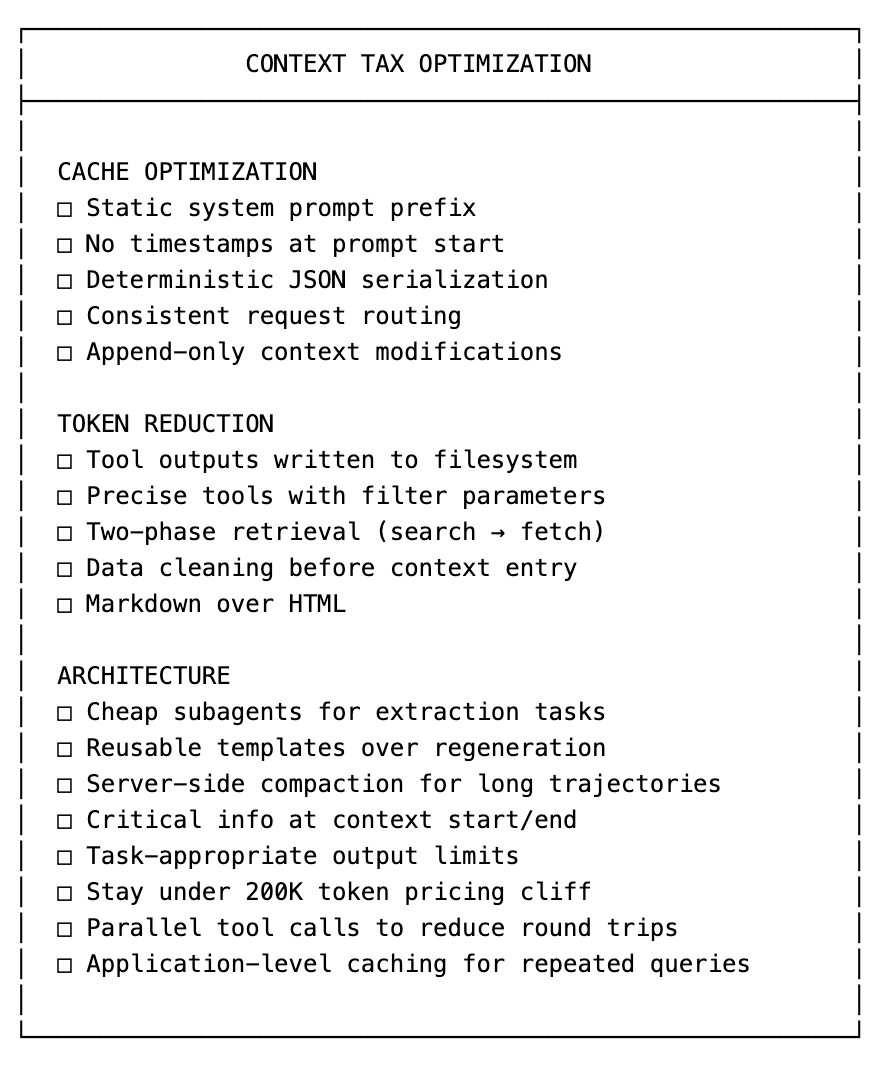

The Tax Avoidance Checklist for the IRS of LLMs.

The Meta Lesson

Context engineering isn’t glamorous. It’s not the exciting part of building agents. But it’s the difference between a demo that impresses and a product that scales with decent gross margin.

The best teams building sustainable agent products are obsessing over token efficiency the same way database engineers obsess over query optimization. Because at scale, every wasted token is money on fire.

The context tax is real. But with the right architecture, it’s largely avoidable.